Nexa SDK enables developers to ship any AI model to any device in minutes, providing production-ready on-device inference across various backends. It supports state-of-the-art (SOTA) models and offers a range of features that enhance the deployment and performance of AI applications.

Nexa SDK | Deploy any AI model to any device in minutes.

Nexa SDK simplifies the deployment of LLMs, multimodal, ASR, and TTS models on mobile devices, PCs, automotive systems, and IoT. It is fast, private, and ready for production on NPU, GPU, and CPU.

Introduction

Features

- Model Hub: Nexa SDK provides access to a diverse range of AI models, including multimodal models that understand text, images, and audio.

- On-Device Inference: The SDK allows for production-ready on-device inference, ensuring that AI models can run efficiently on various hardware platforms.

- Support for Multiple Backends: Nexa SDK supports various backends, including Qualcomm NPU, Intel NPU, and others, enabling developers to optimize performance based on the target device.

- NexaQuant Compression: The proprietary NexaQuant compression method reduces model size by up to 4X without sacrificing accuracy, making it suitable for mobile and edge devices.

- Rapid Prototyping: Developers can quickly test models using the Nexa CLI, which allows for local OpenAI-compatible API setup in just three lines of code.

- Cross-Platform Compatibility: The SDK is designed to integrate seamlessly into applications across multiple operating systems, including Windows, macOS, Linux, Android, and iOS.
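Because the local API is described as OpenAI-compatible, a client can talk to it using the standard chat-completions request shape. The following is a minimal sketch using only the Python standard library. The base URL, port, and model name are assumptions for illustration and are not taken from the Nexa documentation; substitute the address your local server reports and a model you have actually downloaded.

```python
import json
import urllib.request

# Assumption: address of the locally running OpenAI-compatible server.
BASE_URL = "http://127.0.0.1:8080/v1"


def build_chat_request(model: str, prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request in the OpenAI chat-completions format."""
    body = {
        "model": model,  # hypothetical model identifier supplied by the caller
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, prompt: str) -> str:
    """Send the request to the local server and return the reply text."""
    request = build_chat_request(model, prompt)
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    # OpenAI-compatible servers return the reply under choices[0].message.content.
    return payload["choices"][0]["message"]["content"]
```

Calling `chat("my-local-model", "Hello")` requires a server to be running at `BASE_URL`; `build_chat_request` can be inspected without one.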

How to Use?

- Explore the Model Hub to find the right AI model for your application needs.

- Utilize NexaQuant to optimize your models for mobile and edge deployment.

- Test your models using the Nexa CLI for rapid prototyping and development.

- Ensure compatibility with your target device by selecting the appropriate backend (NPU, GPU, or CPU).

- Keep an eye on updates and new models added to the Nexa SDK to leverage the latest advancements in AI technology.
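The backend choice in the steps above can be thought of as a simple preference order with a fallback. The sketch below illustrates only that decision. It is not the SDK's own selection logic, which this page does not describe. The function name `pick_backend` and the NPU, GPU, CPU preference order are assumptions made for the example.

```python
# Assumption: prefer the most specialized accelerator first, then fall back.
PREFERENCE = ("npu", "gpu", "cpu")


def pick_backend(available, preference=PREFERENCE):
    """Return the first preferred backend that the target device offers."""
    offered = {name.lower() for name in available}
    for backend in preference:
        if backend in offered:
            return backend
    raise ValueError("no supported backend available on this device")


# A device with a GPU but no NPU falls back to the GPU.
choice = pick_backend(["CPU", "GPU"])
```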

FAQ

What is Nexa SDK?

Nexa SDK is a software development kit that allows developers to deploy AI models on various devices quickly and efficiently, providing on-device inference capabilities.

How does Nexa SDK support different AI models?

Nexa SDK supports a wide range of AI models, including state-of-the-art models optimized for different hardware backends, ensuring flexibility and performance.

Can I use Nexa SDK for real-time applications?

Yes, Nexa SDK is designed for real-time applications, providing fast and efficient on-device inference suitable for various use cases.

What platforms does Nexa SDK support?

Nexa SDK supports multiple platforms, including Windows, macOS, Linux, Android, and iOS, allowing for broad application development.

How does NexaQuant improve model performance?

NexaQuant uses a proprietary compression method to reduce model size while retaining accuracy, making it ideal for deployment on resource-constrained devices.
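To make the size reduction concrete, the arithmetic below estimates the storage needed for model weights at two bit widths. This page does not state which bit widths NexaQuant uses, so the 16-bit and 4-bit figures are assumptions chosen because they give a 4x ratio. The estimate also ignores metadata and any per-block scaling data, so real files will differ.

```python
def weights_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical 8-billion-parameter model.
original_gb = weights_size_gb(8e9, 16)   # assumed 16-bit weights
compressed_gb = weights_size_gb(8e9, 4)  # assumed 4-bit weights
reduction = original_gb / compressed_gb
```

Under these assumptions the weights shrink from about 16 GB to about 4 GB, which is the difference between a model that does not fit in a typical phone's memory and one that may.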

Price

- Free plan: $0/month

- Basic plan: $9.99/month

- Standard plan: $19.99/month

- Professional plan: $49.99/month

Prices are for reference only. Refer to the official site for current pricing.

Evaluation

- Nexa SDK excels in providing a user-friendly interface for deploying AI models across various devices, making it accessible for developers of all skill levels.

- The support for multiple backends and the ability to optimize models for specific hardware enhances its versatility.

- The NexaQuant compression technology is a significant advantage, allowing for efficient use of resources without compromising performance.

- However, some advanced features have a learning curve, particularly for users unfamiliar with AI model deployment.

- Continuous updates and model additions are essential to maintain competitiveness in the rapidly evolving AI landscape.

Related Websites

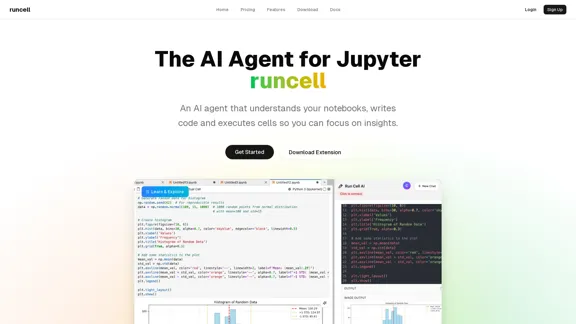

Runcell is an AI agent for Jupyter that can automate writing code, executing cells, debugging, and even explaining results while you observe.

7.29 K

Create web applications using Unshift's drag-and-drop builder designed for contemporary JavaScript frameworks. Export production-ready, fully-typed code without any vendor lock-in.

0

Vibe Coding Platform - Your Gateway to Learning Code

Vibe Coding Platform - Your Gateway to Learning CodeThe ultimate vibe coding platform where Claude Code is directly connected to cloud hosting. Get instant public URLs and code from anywhere, including your phone.

0

TraeAI - Trae - Accelerate Your Shipping with Trae

TraeAI - Trae - Accelerate Your Shipping with TraeTrae is an adaptive AI IDE that changes the way you work, collaborating with you to operate more quickly.

2.49 M

Undetectable AI for Free - Bypass AI Detectors Instantly | PassMe.ai

Undetectable AI for Free - Bypass AI Detectors Instantly | PassMe.aiTry the undetectable AI bypasser of PassMe.ai and bypass AI detectors of any kind. PassMe can make any AI text undetectable with a 98%+ effectiveness.

13.88 K