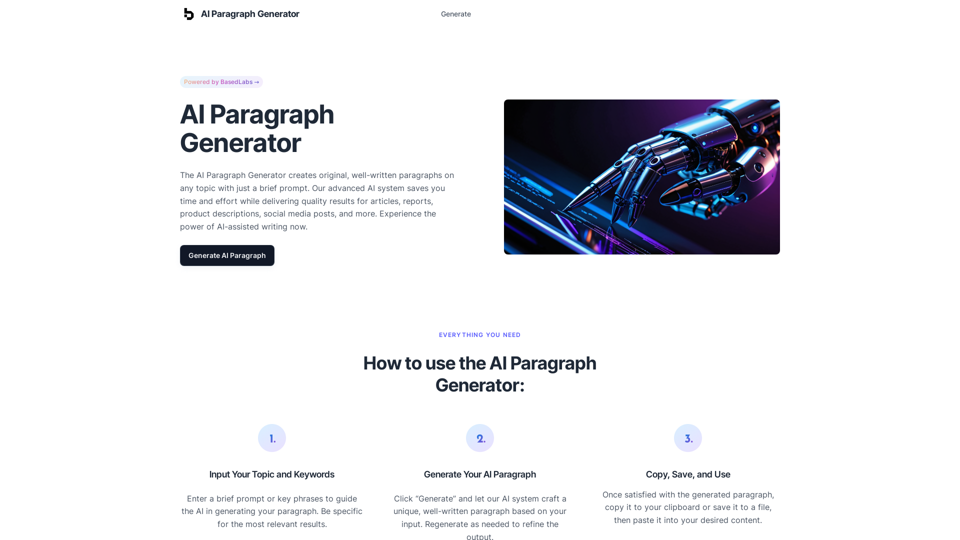

AI Paragraph Generator is an innovative tool that leverages artificial intelligence to create original, high-quality paragraphs on any topic. With just a brief prompt, users can generate well-written content for various purposes, including articles, reports, product descriptions, and social media posts. This powerful AI-driven system is designed to save time and effort while delivering consistent, professional results.

AI Paragraph Generator – Quickly generate paragraphs on any topic with our state-of-the-art AI Paragraph Generator

Features

Input Your Topic and Keywords

Users start by entering a brief prompt or a few key phrases that guide the AI toward relevant paragraphs. For best results, make the input as specific as possible.

Generate Your AI Paragraph

With a simple click of the "Generate" button, the advanced AI system crafts a unique, well-written paragraph based on the provided input. Users have the flexibility to regenerate content as needed to refine the output.

Copy, Save, and Use

Once satisfied with the generated paragraph, users can quickly copy it to their clipboard or save it to a file. The content is then ready to be pasted into any desired document or platform.

Save Time and Boost Productivity

The AI Paragraph Generator offers:

- Streamlined writing process

- Increased efficiency in content creation

Overcome Writer's Block and Generate Fresh Ideas

Users benefit from:

- Creative inspiration for their writing

- New angles and perspectives on topics

Ensure Consistency and Quality

The tool provides:

- Consistent writing style across all generated content

- Professional output suitable for various applications

FAQ

How does the AI Paragraph Generator work?

The AI Paragraph Generator utilizes advanced artificial intelligence to create unique paragraphs based on user input. It analyzes the provided prompt and generates original content tailored to the specified topic.

Is the generated content original?

Yes, the AI creates brand new, original paragraphs each time you use the generator. Every output is unique and crafted specifically for your input.

Is there a limit to how many paragraphs I can generate?

No, there is no limit to the number of paragraphs you can generate. Users are free to create as many paragraphs as they need for their projects or content requirements.

Related Websites

This extension changes the links for images and YouTube videos in ChatGPT or GPT messages into tags that show the images and videos directly.

193.90 M

Please provide me with the text you'd like me to summarize and translate. I'm ready! 😊

Please provide me with the text you'd like me to summarize and translate. I'm ready! 😊I can help you with that! Just paste the web page URL here, and I'll use Claude's powerful abilities to: * Summarize the main points of the page in clear, concise language. * Translate the entire page into English for you. Let me know if you have a page you'd like me to work on!

193.90 M

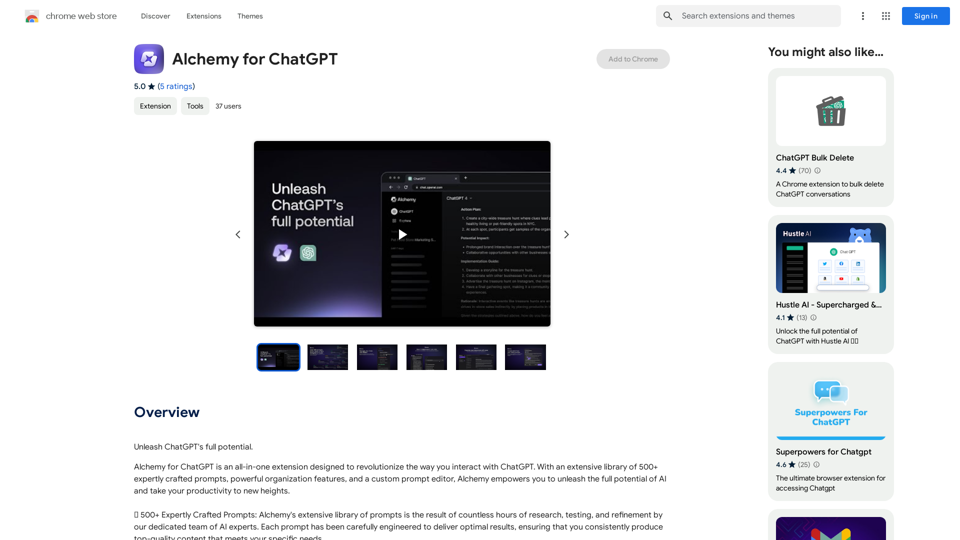

Alchemy for ChatGPT Introduction Alchemy is a powerful framework for building and deploying large language models (LLMs) like ChatGPT. It provides a comprehensive set of tools and resources to streamline the entire LLM development lifecycle, from training to deployment and monitoring. Key Features * Modular Design: Alchemy is built with a modular architecture, allowing developers to easily customize and extend its functionality. * Fine-Tuning Capabilities: Alchemy offers robust fine-tuning capabilities, enabling users to adapt pre-trained LLMs to specific tasks and domains. * Deployment Flexibility: Alchemy supports deployment across various platforms, including cloud, on-premise, and edge devices. * Monitoring and Evaluation: Alchemy provides tools for monitoring LLM performance and evaluating their effectiveness. Benefits * Accelerated Development: Alchemy's modularity and pre-built components significantly reduce development time and effort. * Improved Accuracy: Fine-tuning capabilities allow for higher accuracy and performance on specific tasks. * Scalability and Reliability: Alchemy's deployment flexibility ensures scalability and reliability for diverse applications. * Cost-Effectiveness: Alchemy's efficient resource utilization and streamlined workflows contribute to cost savings. Use Cases Alchemy can be used in a wide range of applications, including: * Chatbots and Conversational AI: * Text Generation and Summarization: * Code Generation and Debugging: * Data Analysis and Insights: * Personalized Learning and Education: Conclusion Alchemy is a powerful and versatile framework that empowers developers to build, deploy, and manage LLMs effectively. Its comprehensive features, benefits, and diverse use cases make it an ideal choice for organizations looking to leverage the transformative potential of LLMs.

Alchemy for ChatGPT Introduction Alchemy is a powerful framework for building and deploying large language models (LLMs) like ChatGPT. It provides a comprehensive set of tools and resources to streamline the entire LLM development lifecycle, from training to deployment and monitoring. Key Features * Modular Design: Alchemy is built with a modular architecture, allowing developers to easily customize and extend its functionality. * Fine-Tuning Capabilities: Alchemy offers robust fine-tuning capabilities, enabling users to adapt pre-trained LLMs to specific tasks and domains. * Deployment Flexibility: Alchemy supports deployment across various platforms, including cloud, on-premise, and edge devices. * Monitoring and Evaluation: Alchemy provides tools for monitoring LLM performance and evaluating their effectiveness. Benefits * Accelerated Development: Alchemy's modularity and pre-built components significantly reduce development time and effort. * Improved Accuracy: Fine-tuning capabilities allow for higher accuracy and performance on specific tasks. * Scalability and Reliability: Alchemy's deployment flexibility ensures scalability and reliability for diverse applications. * Cost-Effectiveness: Alchemy's efficient resource utilization and streamlined workflows contribute to cost savings. Use Cases Alchemy can be used in a wide range of applications, including: * Chatbots and Conversational AI: * Text Generation and Summarization: * Code Generation and Debugging: * Data Analysis and Insights: * Personalized Learning and Education: Conclusion Alchemy is a powerful and versatile framework that empowers developers to build, deploy, and manage LLMs effectively. Its comprehensive features, benefits, and diverse use cases make it an ideal choice for organizations looking to leverage the transformative potential of LLMs.Unlock the full capabilities of ChatGPT.

193.90 M