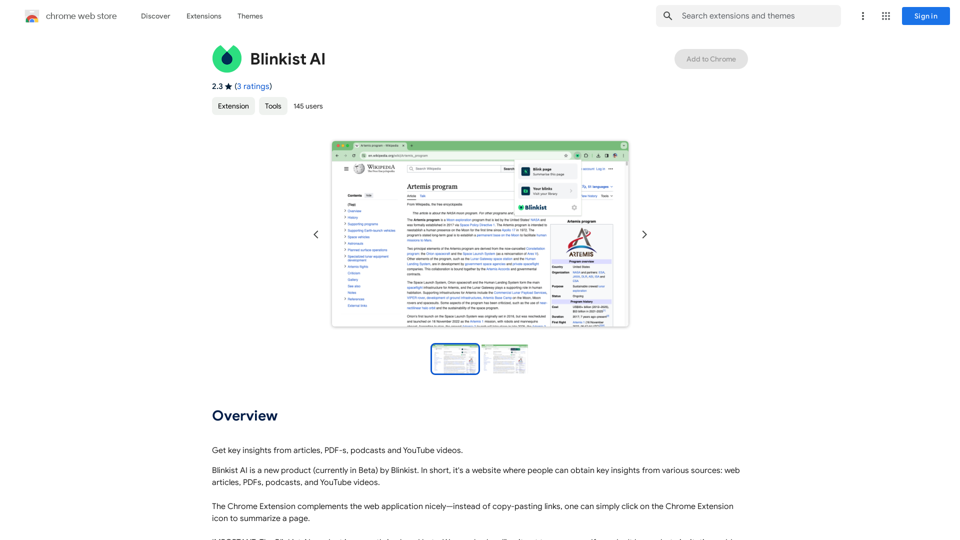

Blinkist AI is a summarization tool aimed at faster information consumption and higher productivity. Its Chrome Extension summarizes web pages, articles, PDFs, podcasts, and YouTube videos with a single click and pulls out the key insights from each source, so users spend less time reading and understand more. Blinkist AI is currently in closed beta and is rolling out gradually to new users. The Chrome Extension is free, with subscription options for extended access.

Blinkist AI

Extract the most important information from articles, PDFs, podcasts, and YouTube videos.

Introduction

Features

Versatile Content Summarization

Blinkist AI can extract key insights from a wide range of content types, including:

- Web articles

- PDF documents

- Podcasts

- YouTube videos

One-Click Summarization

The Chrome Extension summarizes the current web page with a single click, which cuts down the time spent gathering information.

Time-Saving and Productivity Boost

By providing quick summaries and key insights, Blinkist AI helps users:

- Save valuable time

- Enhance productivity

- Improve understanding of complex topics

Flexible Pricing Model

Blinkist AI offers:

- Free Chrome Extension for basic use

- Subscription options for extended access and features

User-Friendly Interface

The tool is designed for ease of use:

- Add the Chrome Extension to your browser

- Click the extension icon to summarize a page

- Instantly receive key insights from the summarized content

FAQ

What types of content can Blinkist AI summarize?

Blinkist AI can summarize various content types, including:

- Web articles

- PDF documents

- Podcasts

- YouTube videos

How do I start using Blinkist AI?

To begin using Blinkist AI:

- Add the Blinkist AI Chrome Extension to your browser

- Click on the extension icon when you want to summarize a page

- Review the key insights provided by the AI

Is Blinkist AI completely free to use?

Not entirely. Pricing works as follows:

- The Chrome Extension is free to use

- A subscription is required for extended access beyond the free usage limits

What are the main benefits of using Blinkist AI?

The key benefits of using Blinkist AI include:

- Time-saving through quick access to key insights

- Improved understanding of complex topics

- Enhanced productivity with efficient information processing

Is Blinkist AI available to everyone?

Currently, Blinkist AI is in a closed beta phase. New users are being added gradually as the product continues to develop and improve.

Latest Traffic Insights

- Monthly Visits: 193.90 M

- Bounce Rate: 56.27%

- Pages per Visit: 2.71

- Average Time on Site: 115.91 s

- Global Rank: not available

- Country Rank: not available

Traffic Sources

- Social Media: 0.48%

- Paid Referrals: 0.55%

- Email: 0.15%

- Referrals: 12.81%

- Search Engines: 16.21%

- Direct: 69.81%

Related Websites

AnkAI turns PDFs into Anki flashcards, making studying faster and simpler.

Create original AI images from any page on the web. Introducing Imagifi from Easyfi.ai, your personal AI image generator! With…

Wallow: to roll or move about in a lazy, relaxed way, often in a pleasant or enjoyable manner. Wallow streamlines digital product development with real-time incident tracking, team alignment, and integrated communication tools, aiming to make collaboration smoother and teams more productive.

A medical assistant for primary care physicians that transcribes patient visits and automatically creates medical records, improving patient care and service.

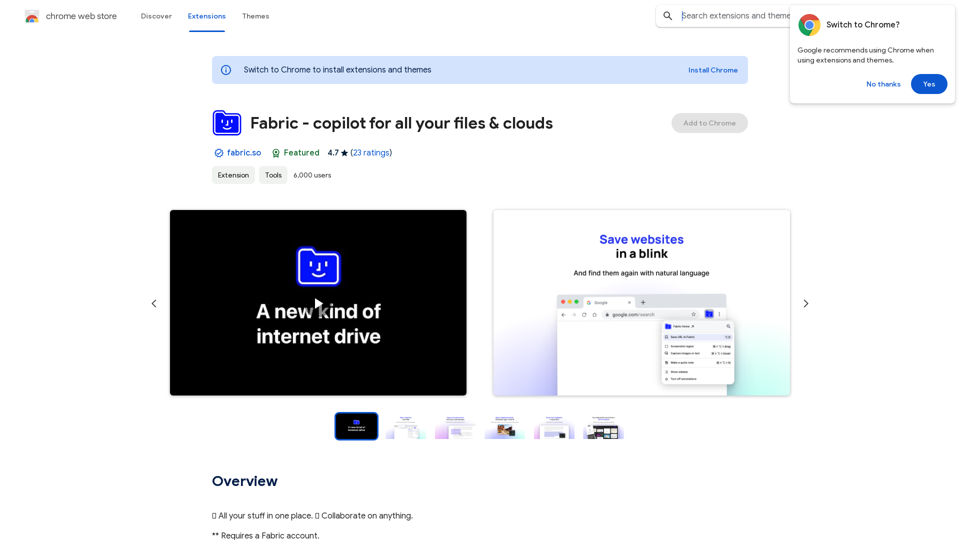

Fabric - Your assistant for all your files and cloud storage. 🍱 All your things in one place. 👋 Work together on anything.