Langtrace AI is an open-source observability tool for monitoring, evaluating, and improving large language model (LLM) applications. It combines tracing, evaluations, and cost and latency metrics to help developers and organizations improve the performance and security of LLM-based systems.

Langtrace AI

Discover Langtrace, the comprehensive tool for monitoring, evaluating, and optimizing large language models. Enhance your AI applications with real-time insights and detailed performance metrics.

Features

Advanced Security and Compliance

- SOC 2 Type II certified cloud platform

- Self-hosting option for enhanced control

- OpenTelemetry standard traces support

- No vendor lock-in, ensuring flexibility

User-Friendly Implementation

- Simple setup with SDK access using just 2 lines of code

- Wide compatibility with popular LLMs, frameworks, and vector databases
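
The two-line setup mentioned above might look roughly like the sketch below. The package name `langtrace_python_sdk`, the `langtrace.init(api_key=...)` call, and the `LANGTRACE_API_KEY` environment variable are assumptions based on the project's published Python quickstart and should be checked against the current documentation. The `init_tracing` helper is hypothetical and only exists so the example degrades safely when the SDK or key is missing.

```python
import os

try:
    # Assumed package/module name; typically installed with
    # `pip install langtrace-python-sdk`.
    from langtrace_python_sdk import langtrace
except ImportError:
    langtrace = None  # SDK not installed; tracing stays disabled


def init_tracing() -> bool:
    """Initialize Langtrace if both the SDK and an API key are available.

    Returns True when tracing was initialized, False otherwise.
    """
    api_key = os.environ.get("LANGTRACE_API_KEY")  # assumed variable name
    if langtrace is None or not api_key:
        return False
    # The "2 lines" are the import above plus this call.
    langtrace.init(api_key=api_key)
    return True
```

Calling `init_tracing()` once at application startup, before any LLM client is created, is the usual pattern for instrumentation libraries of this kind.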

Comprehensive Observability

- End-to-end visibility into the entire ML pipeline

- Traces and logs across framework, vector database, and LLM requests

- Trace tool for monitoring requests, detecting bottlenecks, and optimizing performance
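
To illustrate what detecting a bottleneck from a trace can mean in practice, here is a minimal sketch that picks the slowest span out of a single request. The `Span` class, the stage names, and the timings are all hypothetical. This is not Langtrace's data model or API, only an illustration of the idea.

```python
from dataclasses import dataclass


@dataclass
class Span:
    """One timed step of a request (hypothetical structure)."""
    name: str        # e.g. "framework", "vectordb", "llm"
    start_ms: float
    end_ms: float

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def slowest_span(spans: list[Span]) -> Span:
    """Return the span with the longest duration (the likely bottleneck)."""
    if not spans:
        raise ValueError("trace has no spans")
    return max(spans, key=lambda s: s.duration_ms)


# Made-up timings for one request passing through three stages.
trace = [
    Span("framework", 0.0, 40.0),
    Span("vectordb", 40.0, 160.0),
    Span("llm", 160.0, 1010.0),
]
bottleneck = slowest_span(trace)
print(f"{bottleneck.name}: {bottleneck.duration_ms:.0f} ms")  # prints "llm: 850 ms"
```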

Continuous Improvement Cycle

- Annotation feature for manual evaluation of LLM requests and dataset creation

- Automated LLM-based evaluations

- Built-in heuristic, statistical, and model-based evaluations
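
As a rough idea of what a heuristic evaluation could look like, the sketch below scores a response by the fraction of required terms it contains and rejects responses over a word limit. The function name, scoring rule, and thresholds are hypothetical and are not taken from Langtrace's built-in evaluations.

```python
def heuristic_score(response: str, required_terms: list[str], max_words: int = 100) -> float:
    """Return a score in [0, 1].

    The score is the fraction of required terms found in the response
    (case-insensitive), or 0.0 if the response exceeds max_words.
    """
    if len(response.split()) > max_words:
        return 0.0
    if not required_terms:
        return 1.0
    text = response.lower()
    hits = sum(1 for term in required_terms if term.lower() in text)
    return hits / len(required_terms)


score = heuristic_score(
    "Langtrace supports OpenTelemetry traces.",
    ["opentelemetry", "self-hosting"],
)
print(score)  # prints 0.5
```

Scores like this can be stored alongside manual annotations so that automated and human judgments can be compared on the same requests.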

Performance Analysis Tools

- Playground for comparing prompt performance across models

- Metrics tracking for cost and latency at project, model, and user levels
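
Tracking cost and latency at the project, model, and user levels amounts to grouping request records by one of those keys. The sketch below shows the idea with made-up records. The field names, model names, and the `summarize` helper are hypothetical and do not reflect Langtrace's schema.

```python
from collections import defaultdict
from statistics import mean

# Made-up request records; field names are illustrative only.
requests = [
    {"project": "chatbot", "model": "model-a", "user": "u1", "cost_usd": 0.002, "latency_ms": 800},
    {"project": "chatbot", "model": "model-b", "user": "u2", "cost_usd": 0.010, "latency_ms": 1200},
    {"project": "search", "model": "model-a", "user": "u1", "cost_usd": 0.004, "latency_ms": 600},
]


def summarize(records, level):
    """Group records by `level` ("project", "model", or "user") and
    report total cost and average latency for each group."""
    groups = defaultdict(list)
    for record in records:
        groups[record[level]].append(record)
    return {
        key: {
            "total_cost_usd": round(sum(r["cost_usd"] for r in rows), 6),
            "avg_latency_ms": mean(r["latency_ms"] for r in rows),
        }
        for key, rows in groups.items()
    }


by_model = summarize(requests, "model")
print(by_model["model-a"])  # {'total_cost_usd': 0.006, 'avg_latency_ms': 700}
```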

FAQ

What makes Langtrace AI unique in the LLM observability space?

Langtrace AI stands out due to its open-source nature, advanced security features (SOC 2 Type II certification), and comprehensive toolset. It offers end-to-end observability, a feedback loop for continuous improvement, and supports self-hosting, making it a versatile and secure choice for LLM application monitoring and optimization.

How does Langtrace AI support different LLM frameworks and databases?

Langtrace AI is designed to be widely compatible with popular LLMs, frameworks, and vector databases. This broad support ensures that users can integrate Langtrace AI into their existing LLM infrastructure without significant modifications or limitations.

Can Langtrace AI help in improving LLM application performance?

Yes, Langtrace AI provides several tools for performance improvement:

- The Trace tool helps monitor requests and detect bottlenecks

- The Annotate feature allows for manual evaluation and dataset creation

- The Evaluate tool runs automated LLM-based evaluations

- The Playground enables comparison of prompt performance across models

- The Metrics tool tracks cost and latency at various levels

These features collectively contribute to continuous testing, enhancement, and optimization of LLM applications.

Is there community support for Langtrace AI users?

Yes, Langtrace AI offers community support through:

- A Discord community for user interactions and discussions

- A GitHub repository for open-source contributions and issue tracking

These platforms provide opportunities for users to engage, seek help, and contribute to the tool's development.

Latest Traffic Insights

- Monthly Visits: 15.51 K

- Bounce Rate: 42.58%

- Pages Per Visit: 1.84

- Time on Site (s): 23.95

- Global Rank: 1,559,694

- Country Rank (United States): 1,211,659

Traffic Sources

- Social Media: 12.60%

- Paid Referrals: 2.15%

- Email: 0.12%

- Referrals: 7.44%

- Search Engines: 37.70%

- Direct: 39.87%

Related Websites

Feta helps product and engineering teams capture meeting context, automate post-meeting tasks, and focus only on high-impact work.

0

Alter: The seamless AI that enhances your Mac. Bypass the chat, perform instant actions across all applications. Boost your productivity by 10 times with full privacy control.

19.74 K

Create AI Tools without coding in minutes | TypeflowAI

Create AI Tools without coding in minutes | TypeflowAITypeflowAI enables users to create AI tools using dynamic forms and advanced prompts. Improve your SEO, boost traffic, and generate more leads by incorporating these tools into your website.

593

Briefy turns all kinds of lengthy content into structured summaries and saves them to your knowledge base for later review.

67.70 K

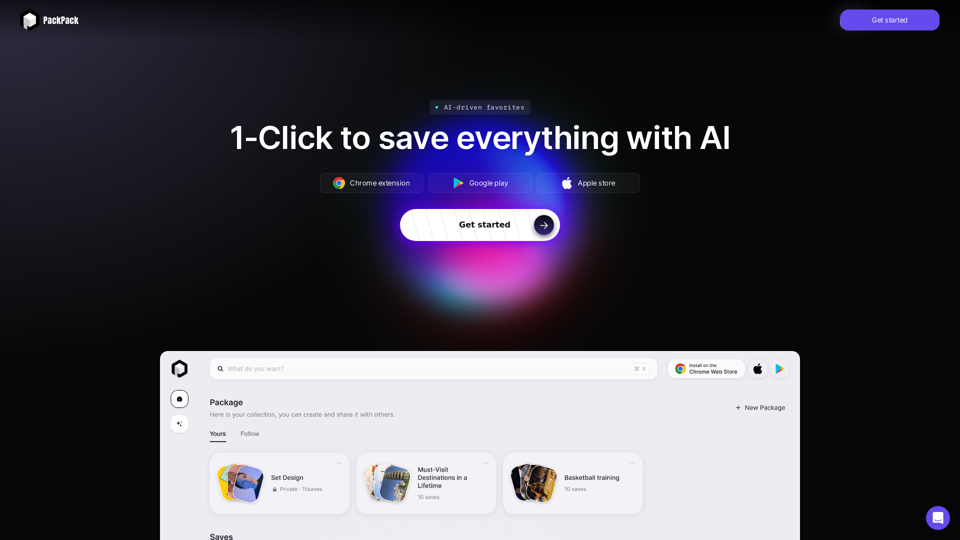

Save any webpage with one click and explore it with AI. Quickly get the main points with AI summaries and find new understandings with AI-powered question and answer.

47.24 K